Testing

Functional, Non-functional and Process testing | Guidance notes

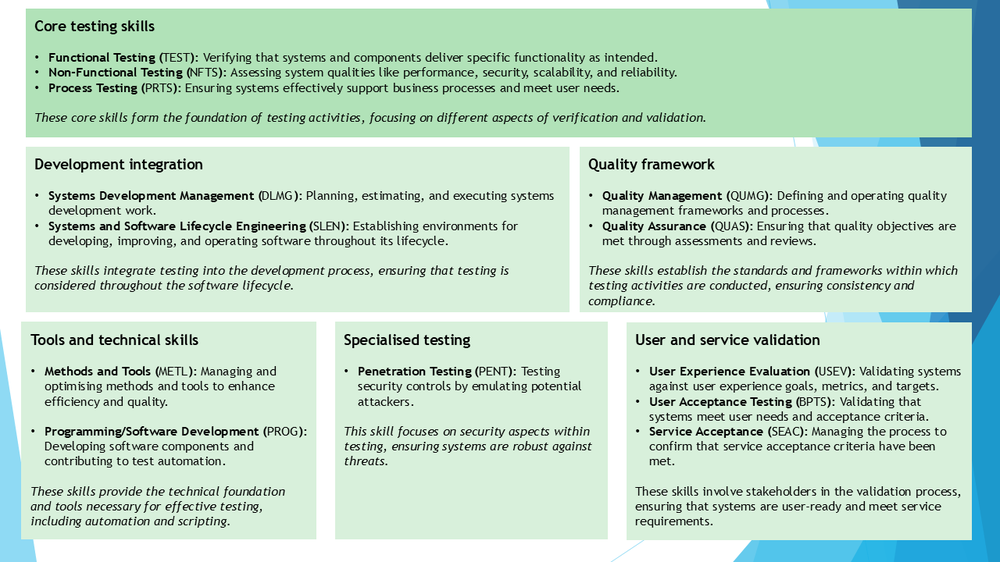

The update aligns the framework with contemporary working practices, tools, roles, career paths, and soft skills required for the testing profession.

- Overview of changes to the core Testing skills

- Detailed Explanation of the Three Testing Skills

- Greater emphasis on business skills/behavioural factors in SFIA 9

- the importance of business skills/behavioural factors, such as planning, decision-making, communication and collaboration, across all levels of responsibility.

- Frequently Asked Questions (FAQ)

- addressing test automation, test planning, design, analysis, testing tools and techniques

- An overview of all testing-related skills in SFIA 9

- such as those for DevOps, quality assurance, user acceptance, penetration testing, usability and service acceptance

The updated testing skills framework includes the following key changes:

- Refined and restructured definitions and descriptions of Testing skills

- including process testing, functional testing, and non-functional testing

- Improved alignment of testing skills with SFIA levels, providing clearer guidance for career progression

- Greater visibility of the importance of soft skills, such as communication and collaboration, across all levels

- Recognition of diverse entry points into testing roles, including transitions from business operations

- Acknowledgement of the growing importance of test automation and agile testing practices

Overview of Changes in SFIA 9 Testing Skills

In previous versions of SFIA, testing was represented by a single skill (TEST) that encompassed almost all types of systems and software testing. This broad categorisation gave the false impression that all testing is similar and that proficiency in one aspect implies equal proficiency in all aspects. This misconception can hamper successful delivery by failing to recognise the different mix of testing skills needed in specific organisational or project contexts.

To address this issue, SFIA 9 has redefined and restructured the testing skills framework by introducing three distinct skills:

- Functional Testing (TEST)

- Non-Functional Testing (NFTS)

- Process Testing (PRTS)

These skills focus on specific areas of testing, each with its own context, techniques, and areas of expertise.

Detailed Explanation of the Three Testing Skills

Functional Testing (TEST)

Definition: Functional Testing focuses on assessing specified or unspecified functional requirements and characteristics of products, systems, and services through investigation and testing.

Key Responsibilities:

- Understanding how software and systems are built to identify issues in their code.

- Verifying that the system performs the functions it is designed to do.

- Designing and executing test cases based on functional specifications and requirements.

Examples:

- Testing new features in a software application to ensure they work as intended.

- Verifying that a system correctly processes data inputs and produces expected outputs.

- Testing the calculations in a financial application to ensure accurate interest computations and transaction processing.

- Validating that a search function on a website returns relevant results based on user queries.

- Ensuring that a messaging system correctly sends, receives, and displays messages between users.

- Testing form validations to confirm that mandatory fields are enforced and data formats are correctly handled.

- Verifying that an online booking system accurately reflects availability and successfully processes reservations.
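
As an illustration of the interest-calculation and form-validation examples above, here is a minimal Python sketch of functional test cases. The functions `simple_interest` and `validate_form`, the 365-day basis, the rounding rule and the mandatory field names are all assumptions made for this sketch; they are not part of SFIA or of any particular system.

```python
from decimal import Decimal, ROUND_HALF_UP


def simple_interest(principal: Decimal, annual_rate: Decimal, days: int) -> Decimal:
    """Hypothetical function under test: simple interest on an assumed
    365-day basis, rounded half-up to two decimal places."""
    interest = principal * annual_rate * Decimal(days) / Decimal(365)
    return interest.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


def validate_form(form: dict) -> list[str]:
    """Hypothetical validator: returns the mandatory fields that are
    missing or blank (field names are assumptions)."""
    mandatory = ("name", "email")
    return [field for field in mandatory if not str(form.get(field, "")).strip()]


# Functional test cases: each compares actual behaviour with the
# behaviour stated in the (assumed) specification.
def test_interest_for_full_year():
    assert simple_interest(Decimal("1000"), Decimal("0.05"), 365) == Decimal("50.00")


def test_interest_rounds_half_up():
    # 1000 * 0.05 * 1 / 365 = 0.13698..., which rounds to 0.14
    assert simple_interest(Decimal("1000"), Decimal("0.05"), 1) == Decimal("0.14")


def test_mandatory_fields_enforced():
    assert validate_form({"name": "Ada", "email": " "}) == ["email"]
    assert validate_form({"name": "Ada", "email": "ada@example.com"}) == []
```

The test functions use plain `assert` statements, so they can be called directly or collected by a test runner such as pytest.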

Non-Functional Testing (NFTS)

Definition: Non-Functional Testing focuses on assessing systems and services to evaluate performance, security, scalability, and other non-functional qualities against requirements or expected standards.

Key Responsibilities:

- Understanding system implementation aspects (e.g., cloud environments, networks) and required supporting qualities.

- Evaluating attributes such as performance, reliability, usability, and scalability.

- Ensuring that applications work effectively and efficiently in real-world contexts.

Examples:

- Conducting performance testing to ensure an application can handle expected user loads.

- Performing security testing to identify vulnerabilities in a system.

- Executing load testing on a web server to assess how it behaves under peak traffic conditions.

- Testing the system's recovery capabilities to verify it can recover from crashes or failures without data loss.

- Performing compatibility testing to ensure an application works across different browsers, operating systems, or devices.

- Conducting stress testing to determine the system's robustness and error-handling under extreme conditions.

- Testing the scalability of a cloud-based service to confirm it can handle increased workloads by allocating additional resources as needed.

- Measuring response times and throughput to ensure the system meets performance benchmarks.
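
The last example, measuring response times and throughput against a benchmark, could be sketched in Python roughly as follows. `handle_request` is a stand-in for the system under test, and the threshold values in the usage note are illustrative assumptions only. Realistic performance testing would normally use dedicated tooling and a representative environment.

```python
import math
import statistics
import time
from typing import Callable


def measure(operation: Callable[[], object], iterations: int) -> dict:
    """Time repeated calls and summarise latency (seconds) and
    throughput (calls per second)."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    latencies = []
    started = time.perf_counter()
    for _ in range(iterations):
        t0 = time.perf_counter()
        operation()
        latencies.append(time.perf_counter() - t0)
    elapsed = time.perf_counter() - started
    latencies.sort()
    # Nearest-rank 95th percentile of the sorted latencies.
    p95_index = math.ceil(0.95 * len(latencies)) - 1
    return {
        "median_s": statistics.median(latencies),
        "p95_s": latencies[p95_index],
        "throughput_per_s": iterations / elapsed,
    }


def handle_request() -> int:
    """Stand-in for the system under test (hypothetical)."""
    return sum(range(1000))


def meets_benchmark(result: dict, max_p95_s: float, min_throughput: float) -> bool:
    """Compare measured values with agreed performance targets."""
    return result["p95_s"] <= max_p95_s and result["throughput_per_s"] >= min_throughput
```

A call such as `meets_benchmark(measure(handle_request, 200), max_p95_s=0.5, min_throughput=10)` then gives a pass or fail against the chosen targets. The measured numbers vary between runs and machines.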

Process Testing (PRTS)

Definition: Process Testing focuses on assessing documented and undocumented process flows within a product, system, or service against business needs through investigation and testing.

Key Responsibilities:

- Identifying, investigating, and clarifying necessary business behaviours that a system must enable.

- Ensuring that the system meets the organisation's business requirements and processes.

- Collaborating with stakeholders to validate that the system supports required business workflows.

Examples:

- Testing business processes during the implementation of a commercial off-the-shelf application to ensure it meets specific organisational needs.

- Validating process flows in enterprise resource planning (ERP) systems to align with company operations.

- Assessing the end-to-end order fulfilment process in an e-commerce platform to ensure smooth operation from order placement to delivery.

- Verifying that a customer relationship management (CRM) system supports the sales team's workflow, including lead management, follow-ups, and reporting.

- Testing the integration of a new human resources system to ensure it accurately handles recruitment, onboarding, and employee records management.

- Evaluating the effectiveness of a supply chain management system in coordinating procurement, inventory control, and logistics.

- Checking that a healthcare information system supports patient registration, appointment scheduling, and medical record management according to healthcare regulations.
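
To show how a process test differs from a functional test, the order fulfilment example above can be sketched in Python as a check on an end-to-end flow rather than on a single function. The states, the allowed transitions and the `Order` class are assumptions made for illustration; in practice they would come from the organisation's own process definitions.

```python
# Allowed transitions in a hypothetical order fulfilment process.
ALLOWED = {
    "placed": {"paid", "cancelled"},
    "paid": {"shipped", "cancelled"},
    "shipped": {"delivered"},
    "delivered": set(),
    "cancelled": set(),
}


class Order:
    """Minimal stand-in for a system that tracks an order through the process."""

    def __init__(self) -> None:
        self.state = "placed"
        self.history = ["placed"]

    def advance(self, new_state: str) -> None:
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"cannot move from {self.state} to {new_state}")
        self.state = new_state
        self.history.append(new_state)


def run_flow(steps: list[str]) -> list[str]:
    """Drive one order through the given steps and return the path taken."""
    order = Order()
    for step in steps:
        order.advance(step)
    return order.history


def test_documented_flow_completes():
    assert run_flow(["paid", "shipped", "delivered"]) == [
        "placed", "paid", "shipped", "delivered",
    ]


def test_shipping_before_payment_is_rejected():
    try:
        run_flow(["shipped"])
    except ValueError:
        return
    raise AssertionError("an unpaid order must not be shipped")
```

The first test follows the documented flow from placement to delivery. The second probes a step the business process should not allow.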

Rationale for the Changes

Why Split Testing into Three Skills?

The division of testing into three distinct skills provides a pragmatic balance between broader applicability and specific focus. It recognises that:

- Different types of testing require different skills, techniques, and knowledge.

- High competency in one type of testing does not automatically translate to competency in another.

- Specialised skills add value independently and are critical to addressing specific project needs.

By defining these skills separately, SFIA 9 supports both specialists who focus on a particular type of testing and generalists who work across multiple testing domains.

How the Three Skills Differ

- Process Testing requires expertise in business processes and the ability to align system functionality with business needs.

- Functional Testing relies on technical knowledge of software and system architecture to validate functionality.

- Non-Functional Testing demands understanding of system implementation and focuses on performance and other quality attributes.

Each skill employs different techniques and adds unique value to projects, emphasising the need for specialised competencies.

Examples of Application

Implementing a Commercial Off-the-Shelf Application

- Process Testing is crucial to ensure the application meets specific business needs.

- Functional Testing may be less critical if the functionality is standardised.

- Non-Functional Testing ensures the application performs well in the existing environment.

Developing a Standard Product for Multiple Clients

- Functional Testing is key to verifying the application's functionality.

- Process Testing is less feasible as the specific business processes of future customers are unknown.

- Non-Functional Testing ensures the product meets performance and quality standards across diverse environments.

Migrating Platforms to the Cloud

- Non-Functional Testing is essential to assess performance, scalability, and other qualities in the new environment.

- Process Testing and Functional Testing may require minimal effort if functionality and processes remain unchanged.

In each scenario, while all three types of testing add value, the emphasis on one over the others depends on the project context. Lacking the necessary skills for the most beneficial type of testing exposes organisations to risk.

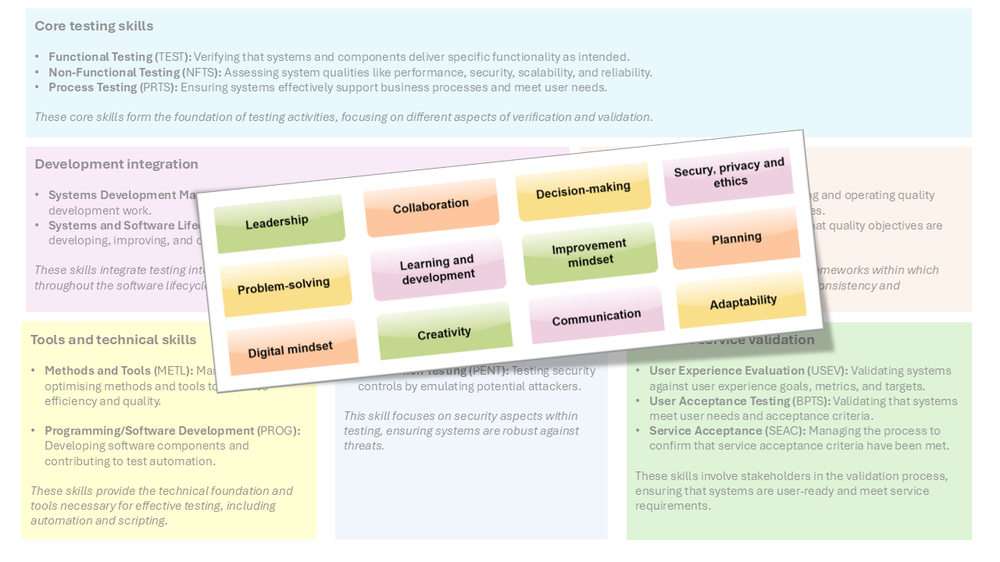

Greater emphasis on behavioural factors

In SFIA 9, greater emphasis is placed on the importance of behavioural factors, such as communication and collaboration, across all levels of responsibility. While technical competencies are crucial, behavioural factors play a key role in how effectively testing professionals perform their duties. The relevance and importance of these behavioural factors will vary based on the level of responsibility associated with a role and the nature of the work performed. SFIA is flexible, allowing behavioural factors to be selected and prioritised accordingly.

These factors are essential for responsibilities such as:

- working effectively within cross-functional teams

- building strong relationships with stakeholders

- identifying and mitigating risks and issues throughout the testing process

Business skills and behavioural factors should also be selected and prioritised alongside technical skills, to complement and enhance them.

Taken together, these give SFIA a comprehensive framework to support testing professionals in their development, tailored to the specific responsibilities and contexts of their roles.

By way of introduction, here is a brief commentary on how each behavioural factor may apply to testing professionals.

| Behavioural factor | Application to testing professionals |
| --- | --- |
| Planning (PLAN) | Effective planning assists testers in organising testing activities, managing resources and meeting project deadlines. Planning must be flexible and iterative to accommodate changing priorities, incorporating risk-based approaches and coordinating activities across different testing levels while considering dependencies and environment availability. |
| Problem-solving (PROB) | Testers regularly encounter defects, inconsistencies and unexpected behaviours in systems. Problem-solving skills enable them to diagnose issues, understand root causes and work with development teams to implement fixes. This includes reproducing complex issues under controlled conditions, analysing test data and metrics, recognising patterns of related issues, and applying root cause analysis techniques. |
| Decision-making (DECM) | Testers apply decision-making skills to prioritise tests, assess risks and select appropriate testing approaches. This extends to making informed decisions about test coverage, determining when to stop testing, balancing quality-time-resource trade-offs, and using metrics and test results for data-driven decisions. |
| Collaboration (COLL) | Testing professionals work with team members to coordinate testing activities, share information and achieve project objectives. They also collaborate with other roles in the development process to build early feedback into activities, and work closely with stakeholders such as users and subject matter experts to ensure testing aligns with real needs. |
| Communication (COMM) | Clear communication helps testers report findings, discuss issues and ensure understanding among team members. This includes translating technical issues for non-technical stakeholders, writing clear and concise bug reports, presenting test results and metrics effectively, and escalating critical issues appropriately. |
| Leadership (LEAD) | Leadership skills help senior testers guide teams, influence testing strategies and align activities with organisational goals. Leaders mentor and coach junior testers, build and maintain testing communities of practice, champion quality throughout the organisation, and demonstrate the ability to influence without authority. |
| Learning and development (LADV) | Continuous learning allows testers to stay current with new testing methodologies, tools and industry standards. This may include cross-skilling in development and operations, learning from both failures and successes, actively sharing knowledge within teams, and staying informed about industry trends and best practices. |
| Improvement mindset (IMPM) | An improvement mindset leads testers to identify ways to enhance testing processes, increasing efficiency and effectiveness. Beyond this, testers provide valuable input into overall product and service improvement through their objective examination of both detailed and overall behaviours, highlighting areas of weakness and potential improvement beyond basic specification compliance. |
| Adaptability (ADAP) | Adaptability helps testers adjust to changing requirements, technologies and testing methods, maintaining effectiveness under varying conditions. This behaviour is particularly critical in modern iterative, adaptive or agile delivery methods, where the development products available for test often vary from day to day, making traditional phase-level planning less effective. |
| Digital mindset (DIGI) | A digital mindset supports testers in using modern tools and technologies to enhance testing performance and productivity. This includes understanding DevOps practices and tools, awareness of security testing approaches, knowledge of test automation frameworks, and understanding GenAI and cloud technologies and their impact on testing. |
| Creativity (CRTY) | Creativity enables testers to design innovative test cases, uncovering defects that standard approaches might miss. This extends to thinking beyond standard test scenarios, creating innovative test data combinations, developing new approaches for emerging technologies, and finding ways to effectively test non-functional requirements. |

Frequently Asked Questions (FAQ)

Q: How does the updated framework address the skills required for functional testing?

A: The SFIA 8 Testing (TEST) skill has been rewritten to focus exclusively on Functional Testing, providing clearer guidance on the competencies required to assess functional requirements of products, systems, and services.

Q: What changes have been made to the way SFIA describes non-functional testing skills?

A: A new skill, Non-Functional Testing (NFTS), has been created to specifically address the assessment of performance, security, scalability, and other non-functional qualities against requirements or expected standards.

Q: How does the updated framework emphasise the importance of soft skills for testing professionals?

A: SFIA 9 emphasises soft skills by making them more visible within the framework, particularly through the generic attributes in the 'Business Skills/Behavioural factors' section. Testing professionals are encouraged to develop skills such as communication, collaboration, and problem-solving across all levels to work effectively within cross-functional teams and build strong stakeholder relationships.

Q: How can testing professionals use the updated framework to plan their career progression?

A: The updated framework provides clearer guidance on the skills and competencies required at each SFIA level. Testing professionals can map their current skills, identify areas for development, and set goals aligned with the framework. They may specialise in one of the testing domains or expand their skills across multiple areas, focusing on progressing within their chosen specialisms or exploring opportunities to broaden their expertise.

Q: How does SFIA support both generalist and specialist testing roles?

A: SFIA supports both roles by providing separate skills for Process Testing, Functional Testing, and Non-Functional Testing. Professionals can specialise in a specific area, developing deep expertise, or adopt a generalist role by developing a broad understanding of testing principles that apply across all specialisms. The consistent progression of responsibilities and skills across levels allows professionals to transition between domains or enhance their skills across multiple testing areas.

Q: Do professionals have to be test managers to progress to higher SFIA levels?

A: No, progression to higher SFIA levels does not necessarily require being a test manager in terms of managing a team. Higher levels involve responsibilities related to planning, leading, and managing testing activities and contributing to organisational policies and standards. Professionals can progress by demonstrating leadership, strategic thinking, and the ability to influence and guide testing practices within their organisation, regardless of their specific job title.

Q: Why aren't there standalone skills for test analysis, test design, and test management?

A: SFIA incorporates different phases of test development into the main testing skills to provide a comprehensive, adaptable, and progressive approach. This integration ensures that professionals have a holistic view of the testing process within their area of focus. It simplifies the framework by avoiding overlapping skills and keeps it manageable and easy to understand.

Q: There seems to be similar wording used for Functional, Non-Functional, and Process Testing. Why is that?

A: The similar wording reflects the consistent progression of responsibilities and skills across different testing domains. While the structure is consistent, each skill has specific focus areas and techniques. The differences are captured in the specific activities and focus areas mentioned in the skill descriptions and guidance notes.

Q: How does SFIA deal with the wide range of tools and techniques used by testing professionals?

A: SFIA acknowledges the importance of tools and techniques by encouraging professionals to adopt and adapt appropriate testing methods, automated tools, and techniques. The Methods and Tools (METL) skill can be used alongside testing skills to support roles responsible for assessing, selecting, implementing, and improving methods and tools. SFIA focuses on general principles rather than specific tools to maintain applicability across various technologies and industries.

Q: How does SFIA address test automation?

A: SFIA recognises the significance of test automation and incorporates it into skill descriptions at various levels. Professionals are expected to:

- At Lower Levels (1-2): Use basic automated testing tools and automate repeatable tasks.

- At Intermediate Levels (3-4): Implement scalable and reliable automated tests and frameworks, promoting productivity through automation and best practices.

- At Higher Levels (5-6): Develop organisational testing capabilities and methods, including advanced test automation strategies and frameworks.

This consistent emphasis encourages professionals to develop their skills in test automation as they advance in their careers.

Conclusion

The updates to testing skills in SFIA 9 reflect the evolving landscape of the testing profession. By redefining and restructuring testing into three distinct skills, SFIA provides clearer guidance for career progression, acknowledges the importance of specialised competencies, and aligns the framework with modern practices. Testing professionals can use this updated framework to plan their careers, develop necessary skills, and contribute effectively to their organisations.

The SFIA framework recommends that behavioural factors are prioritised alongside professional skills.

An overview of testing-related skills in SFIA 9

Remember: different testing roles at different levels will need different SFIA skills.

Methods and Tools (METL)

Definition:

Methods and Tools focuses on leading the adoption, management, and optimisation of methods and tools, ensuring effective use and alignment with organisational objectives.

Key Responsibilities in Testing Context:

- Assessing, selecting, and implementing testing methods and tools that best fit the organisation's needs.

- Configuring and maintaining testing tools, frameworks, and environments.

- Providing guidance, support, and training to testing teams on the use of testing methods and tools.

- Developing and maintaining documentation and user guides for testing tools and methodologies.

- Evaluating the effectiveness of testing methods and tools and recommending improvements.

- Aligning testing methods and tools with organisational standards and good practices.

- Staying informed about industry developments related to testing methods and tools.

Examples:

- Selecting and implementing a new test automation framework to improve testing efficiency.

- Configuring continuous integration tools to integrate automated testing into the build process.

- Providing training sessions for testers on how to use a new performance testing tool.

- Developing guidelines and best practices for using test management tools across the organisation.

- Evaluating the effectiveness of existing testing tools and making recommendations for upgrades or replacements.

- Customising a test reporting tool to align with the organisation's reporting standards.

- Collaborating with other teams to integrate testing tools with development and deployment pipelines.

Note:

Not all testers need to have the Methods and Tools (METL) skill. If a tester is primarily using testing tools as part of their day-to-day activities without being involved in selecting, configuring, or optimising these tools, then METL is unlikely to be a required skill for them. METL is most relevant for roles that involve stewardship of testing methods and tools, such as test architects, tool specialists, or those responsible for defining testing processes and toolsets.

User Experience Evaluation (USEV)

Definition:

User Experience Evaluation focuses on validating systems, products, or services against user experience goals, metrics, and targets.

Key Responsibilities:

- Ensuring that user requirements for usability and accessibility are met.

- Applying evaluation techniques to assess usability, accessibility, and overall user satisfaction.

- Collaborating with stakeholders to improve user experience based on evaluation findings.

Examples:

- Conducting usability testing to identify areas of improvement in a mobile application.

- Evaluating a website's compliance with accessibility standards like WCAG.

- Gathering user feedback through surveys and interviews to refine product designs.

- Performing expert reviews to assess the intuitiveness of a software interface.

- Testing the user experience of a new feature before its release to ensure it meets user expectations.

- Assessing the ease of navigation and information architecture in an online platform.

- Validating that a product meets user experience goals related to efficiency and satisfaction.

Penetration Testing (PENT)

Definition:

Penetration Testing focuses on testing the effectiveness of security controls by emulating the tools and techniques of likely attackers.

Key Responsibilities:

- Conducting ethical hacking to identify and exploit security vulnerabilities.

- Evaluating the effectiveness of current security defences and mitigation controls.

- Testing networks, systems, and applications for weaknesses and vulnerabilities.

- Reporting on findings and providing recommendations for remediation.

- Assessing the business risks associated with identified security issues.

Examples:

- Performing penetration tests on a web application to uncover SQL injection vulnerabilities.

- Simulating cyber-attacks to test an organisation's incident response capabilities.

- Assessing the security of wireless networks by attempting unauthorised access.

- Testing the robustness of encryption implementations in a messaging application.

- Conducting social engineering exercises to evaluate susceptibility to phishing attacks.

- Identifying misconfigurations in firewall settings that could be exploited by attackers.

- Providing detailed reports outlining security weaknesses and recommending fixes.

Service Acceptance (SEAC)

Definition:

Service Acceptance focuses on managing the process to obtain formal confirmation that service acceptance criteria have been met.

Key Responsibilities:

- Defining and documenting service acceptance criteria, including both functional and non-functional requirements.

- Conducting service acceptance testing and evaluating results against the defined criteria.

- Ensuring operational readiness for new or changed services before deployment.

- Collaborating with stakeholders to validate that the service meets required standards.

- Documenting and reporting on service acceptance outcomes and any necessary improvements.

Examples:

- Defining acceptance criteria for a new IT service to ensure it meets performance and security standards.

- Coordinating with service providers to confirm all support processes are in place before launching a service.

- Evaluating test results to verify that a cloud service meets agreed-upon availability and scalability requirements.

- Ensuring that a new software application is ready for production by validating operational support and maintenance plans.

- Documenting the outcomes of service acceptance testing and providing feedback for enhancements.

- Confirming that a newly implemented network infrastructure complies with regulatory requirements before go-live.

- Collaborating with DevOps teams to integrate service acceptance practices into continuous delivery pipelines.

User Acceptance Testing (BPTS)

Definition:

User Acceptance Testing focuses on validating systems, products, business processes, or services to determine whether the acceptance criteria have been satisfied.

Key Responsibilities:

- Collaborating with stakeholders to define clear entry and exit criteria for acceptance testing.

- Designing and specifying test cases and scenarios based on acceptance criteria.

- Facilitating the execution of acceptance tests by end-users or business representatives.

- Ensuring that the system meets required business needs and delivers the predicted business benefits.

- Analysing and reporting on test activities, results, issues, and risks.

Examples:

- Coordinating with business users to perform acceptance testing of a new software application before deployment.

- Defining acceptance criteria for a system upgrade and verifying that these criteria are met.

- Organising and managing user acceptance testing sessions for a new CRM system implementation.

- Collecting feedback from users during acceptance testing and ensuring issues are addressed.

- Ensuring that a new financial reporting system meets regulatory requirements as part of acceptance testing.

- Validating that a new mobile application provides the expected user experience and functionality as agreed upon.

Relationship between process testing and user acceptance testing

User Acceptance Testing (BPTS):

- Conducted by: End-users or business stakeholders.

- Focus: Validating that the system meets user needs and acceptance criteria from a user perspective.

- Objective: Ensuring the system is fit for purpose and ready for deployment.

Process Testing (PRTS):

- Conducted by: Testing professionals within the development or quality assurance team.

- Focus: Verifying that the system supports required business processes and workflows.

- Objective: Ensuring the system functions correctly from a technical and process standpoint before it reaches users.
